General §

#todo Exam IL §

Exam §

- 30.03.21: probably an in-person exam

- Reference book EXTREMELY important for overall understanding

- 50/50 Bookwork and Open-Ended questions

- Bookwork: Specific questions about lectures

- Open-ended: Imagine that… Find a solution for…

Old exam questions §

- Basics

- List 3 examples of lexical ambiguity

- Write down a sentence that matches the RegEx

- Why use tf in tf-idf?

- Assign POS-Tags to this sentence

- What problems might a tokenizer have when processing a "."?

- Why did dense word embeddings become so popular?

- More applied knowledge

- Develop a grammar that

- Accepts x

- Rejects y

- Extend it, so that it also accepts other sentences like z

- -> add more high-level rules

- How would a chart parser parse x

- Applications (demonstrating knowledge)

- Build a word processor; explain how it would predict the next word

- Motivation for smoothing?

- Implications of Zipf's law? A larger corpus does not automatically give better predictions

- How to evaluate the word processor
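For the word-processor question, one possible answer is a bigram model with add-one (Laplace) smoothing. The sketch below is only an illustration under assumptions: the toy corpus, the `<s>` start marker and all function names are made up here, and the lecture may have used a different smoothing method. It shows why smoothing matters: an unseen bigram still gets a non-zero probability.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count contexts and bigrams over tokenised sentences (lists of words)."""
    unigrams = Counter()             # how often each word occurs as a context
    bigrams = defaultdict(Counter)   # bigrams[prev][cur] = count of "prev cur"
    for sent in sentences:
        tokens = ["<s>"] + sent      # "<s>" is an assumed sentence-start marker
        for prev, cur in zip(tokens, tokens[1:]):
            unigrams[prev] += 1
            bigrams[prev][cur] += 1
    vocab = {w for sent in sentences for w in sent}
    return unigrams, bigrams, vocab

def prob(word, prev, unigrams, bigrams, vocab):
    """Add-one smoothed estimate of P(word | prev)."""
    return (bigrams[prev][word] + 1) / (unigrams[prev] + len(vocab))

def predict_next(prev, unigrams, bigrams, vocab):
    """Return the most probable next word (ties broken alphabetically)."""
    return max(sorted(vocab), key=lambda w: prob(w, prev, unigrams, bigrams, vocab))

# Hypothetical toy corpus
corpus = [["the", "cat", "sat"], ["the", "cat", "ran"], ["the", "dog", "sat"]]
unigrams, bigrams, vocab = train_bigrams(corpus)

suggestion = predict_next("the", unigrams, bigrams, vocab)
unseen = prob("dog", "cat", unigrams, bigrams, vocab)  # "cat dog" never occurs
```

Without the `+ 1`, `unseen` would be exactly zero. Because of Zipf's law, many plausible word pairs never show up even in a large corpus, so some form of smoothing is still needed.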

Module overview §

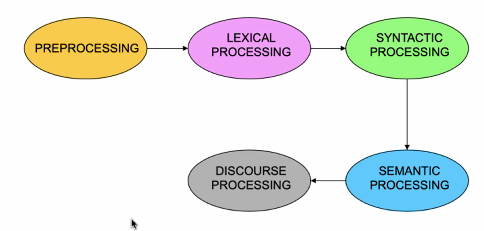

- NLP as a pipeline

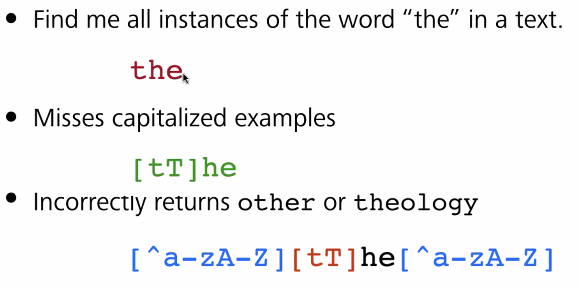

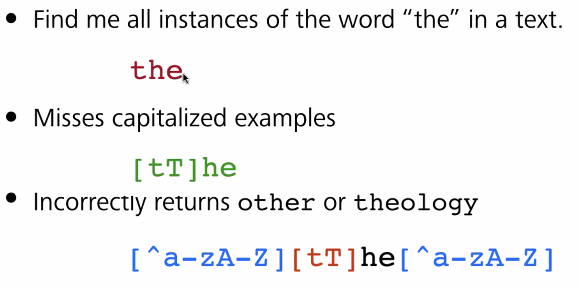

- RegEx, Automata

- Automata

- Regex

- Good quick-and-dirty solution

- Errors

- Type 1: false positive

- Reduce by increasing accuracy/precision

- Type 2: false negative

- Text normalization

- First step in all NLP approaches
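The two error types from the RegEx notes above can be made concrete with a small experiment. This is a sketch under assumptions: the example sentence, the task (find the word "the") and the helper `evaluate` are invented for illustration. It compares a naive pattern with a stricter one and reports false positives, false negatives, precision and recall.

```python
import re

text = "The other day the cat sat there"
tokens = text.split()
# Gold standard: positions where the token really is the word "the".
gold = {i for i, t in enumerate(tokens) if t.lower() == "the"}

def evaluate(pattern):
    """Return (false positives, false negatives, precision, recall) for a pattern."""
    found = {i for i, t in enumerate(tokens) if re.search(pattern, t)}
    true_pos = found & gold
    precision = len(true_pos) / len(found) if found else 0.0
    recall = len(true_pos) / len(gold) if gold else 0.0
    return found - gold, gold - found, precision, recall

naive = evaluate(r"the")           # also hits "other" and "there", misses "The"
better = evaluate(r"\b[tT]he\b")   # word boundaries plus both capitalisations
```

The naive pattern produces both error types at once. Adding word boundaries removes the false positives, and allowing a capital "T" removes the false negative.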

- Language modeling

- Sequence of tokens/tags used to model a language

- Prediction is often a choice between several candidates

- -> Pick the most likely candidate with a statistical approach

- Markov assumption: the next token depends only on the previous few tokens

- POS-Tagging is a basic NLE application

- = assigning Tags to words

- Combine frequency and contextual information

- Frequency: How common is Token t

- Context: How likely is this (Bi)gram

- Text classification

- Assign category to text

- Naive Bayes
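A minimal sketch of Naive Bayes text classification, assuming a multinomial model with add-one smoothing and log probabilities. The training documents, labels and function names are hypothetical, and the lecture's exact formulation may differ.

```python
import math
from collections import Counter, defaultdict

def train_nb(docs):
    """docs: list of (tokens, label). Returns log priors, log likelihoods, vocabulary."""
    label_counts = Counter(label for _, label in docs)
    word_counts = defaultdict(Counter)
    for tokens, label in docs:
        word_counts[label].update(tokens)
    vocab = {w for tokens, _ in docs for w in tokens}
    log_prior = {c: math.log(n / len(docs)) for c, n in label_counts.items()}
    log_lik = {}
    for c in label_counts:
        total = sum(word_counts[c].values())
        # Add-one smoothing so unseen (word, class) pairs get a non-zero probability
        log_lik[c] = {
            w: math.log((word_counts[c][w] + 1) / (total + len(vocab))) for w in vocab
        }
    return log_prior, log_lik, vocab

def classify(tokens, log_prior, log_lik, vocab):
    """Pick the class with the highest log posterior; out-of-vocabulary words are skipped."""
    scores = {
        c: log_prior[c] + sum(log_lik[c][w] for w in tokens if w in vocab)
        for c in log_prior
    }
    return max(scores, key=scores.get)

# Hypothetical toy training set
train = [
    ("great fun film".split(), "pos"),
    ("great acting".split(), "pos"),
    ("boring slow film".split(), "neg"),
    ("slow plot".split(), "neg"),
]
model = train_nb(train)
label_a = classify("great film".split(), *model)
label_b = classify("slow boring unknown".split(), *model)
```

The "naive" part is the assumption that words are independent given the class, which is why the score is simply a sum of per-word log likelihoods.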

- Vector semantics

- Vectors used to represent words and their relationships/meaning

- Nearby vectors = similar words

- Knowledge-Driven approach

- Data-Driven approach

- Embeddings, get info from corpus

- Tf-idf

- = sparse vectors

- count nearby words

- Word2Vec

- = dense vectors

- learn by training

- Similarity = cosine of the angle between two vectors
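A small sketch of sparse tf-idf vectors compared with cosine similarity. Assumptions: vectors are built per document rather than per word (the same arithmetic applies to word-context counts), the weighting is log10(1 + tf) * log10(N / df), and the three documents and all function names are made up. Other tf-idf variants exist.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """docs: dict name -> list of tokens. Returns dict name -> {term: weight}."""
    n = len(docs)
    # Document frequency: in how many documents does each term occur?
    df = Counter(term for tokens in docs.values() for term in set(tokens))
    vectors = {}
    for name, tokens in docs.items():
        tf = Counter(tokens)
        vectors[name] = {
            t: math.log10(1 + c) * math.log10(n / df[t]) for t, c in tf.items()
        }
    return vectors

def cosine(u, v):
    """Cosine of the angle between two sparse vectors stored as dicts."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

# Hypothetical toy documents
docs = {
    "d1": "the cat sat on the mat".split(),
    "d2": "the cat chased the mouse".split(),
    "d3": "the stock market fell".split(),
}
vecs = tfidf_vectors(docs)
sim_12 = cosine(vecs["d1"], vecs["d2"])
sim_13 = cosine(vecs["d1"], vecs["d3"])
```

"the" occurs in every document, so its idf and therefore its weight is zero. tf rewards terms that are frequent within a document, while idf discounts terms that appear everywhere and carry little information.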

- Evaluating

- Extrinsic

- Is the result better than …?

- Intrinsic

- Are the processes better than …?

- BERT

- Dense, contextual

- Trained similarly to word2vec

- Formal Grammars

- Capturing structure of natural languages

- Parsing: map the input to a structure according to the grammar

- Top Down

- Bottom Up

- Chart parsing

- Probabilistic Parsing
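To make bottom-up chart parsing concrete, here is a sketch of a CKY recogniser. Assumptions: CKY is only one chart-parsing algorithm and may not be the one used in the lecture, the grammar must be in Chomsky normal form, and the toy grammar, lexicon and function name are invented for illustration.

```python
from collections import defaultdict

# Hypothetical toy grammar in Chomsky normal form.
# BINARY maps a pair of child categories to the parent categories that can dominate them.
BINARY = {
    ("NP", "VP"): {"S"},
    ("Det", "N"): {"NP"},
    ("V", "NP"): {"VP"},
}
LEXICON = {"the": {"Det"}, "dog": {"N"}, "cat": {"N"}, "chased": {"V"}}

def cky_recognise(words, start="S"):
    """Return True if the grammar accepts the word sequence."""
    n = len(words)
    chart = defaultdict(set)  # chart[(i, j)] = categories spanning words[i:j]
    # Fill the spans of width 1 from the lexicon
    for i, word in enumerate(words):
        chart[(i, i + 1)] |= LEXICON.get(word, set())
    # Combine shorter spans into longer ones, shortest first (bottom-up)
    for width in range(2, n + 1):
        for i in range(n - width + 1):
            j = i + width
            for k in range(i + 1, j):  # split point between the two children
                for left in chart[(i, k)]:
                    for right in chart[(k, j)]:
                        chart[(i, j)] |= BINARY.get((left, right), set())
    return start in chart[(0, n)]

accepted = cky_recognise("the dog chased the cat".split())
rejected = cky_recognise("dog the chased cat".split())
```

The chart stores every constituent found for every span, so no sub-span is analysed twice. Extending the grammar to accept further sentences means adding entries to `BINARY` and `LEXICON`.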

- Semantics

- Required: Representation, Meaning of word/phrase, logical form for result

- Application Areas (VL12 Notes IL)

- Question answering system

- Chatbots

- Rule-Based Vs. ML-Based Vs. Hybrid

- Industry Vs. Academia