Some thoughts on OpenAI.

ChatGPT

- OpenAI was surprised by its success [1]

- In fact, the hype train it created was so big that it single-handedly reignited the hype around the whole AI industry

- They reacted well and jumped on the train

- Sam Altman took the chance and transformed OpenAI from a non-profit research lab into a full-blown product company

- The transition was turbulent, and the company's identity and goals shifted along the way

- Ultimately, Sam Altman and his team succeeded, pushing out opposing parties

- ChatGPT is now a well-known product

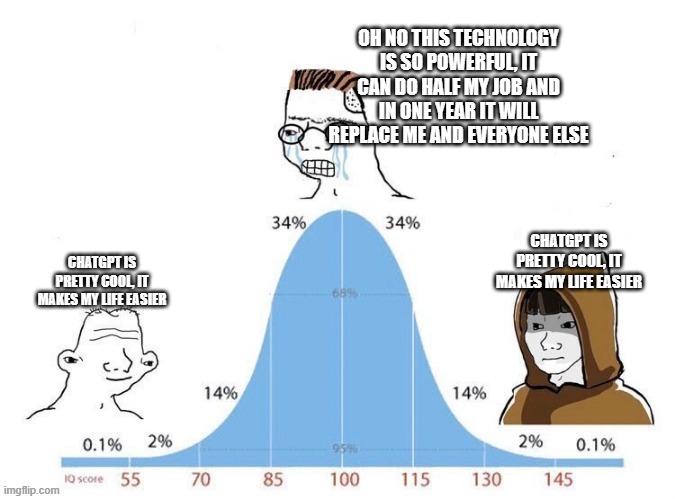

- Consumers see it as a smart chatbot that boosts productivity

- However, consumers don’t understand the technology behind it

- To them, it’s a magical black box capable of creating images, writing code, answering questions, etc.

- In reality, it’s many parts working together seamlessly

- OpenAI intentionally obfuscates ChatGPT’s workings to:

- Avoid giving competitors an advantage

- Make ChatGPT seem like magic

- This fuels fears about AGI

- People worry about job security, the future, and AI dominance

- In other words, it’s the best advertising OpenAI could wish for (see AGI)

Limits

- OpenAI (and everyone else) is running out of training data for GPTs [2]

- Still, I don’t think that training data is the biggest problem, as there will probably be other ways of optimizing and improving models

- However, these improvements will take more time and effort than ‘just’ training a model with more data

- I also doubt that the GPT approach could reach any form of strong AI that would pose the threat of a singularity

- Training GPT models on human artifacts will approximate human performance, not exceed it

- Don’t get me wrong, the current tech is indeed a major breakthrough

- It single-handedly dropped the cost of (low-to-medium) intelligence

- With that drop in cost, there will be major shifts in the economy (and in one’s everyday life)

- I have even built multiple projects myself using the OpenAI API; it’s great

- However, its progress does not scale

- They can’t just ‘train a new model longer, with more data’ to make it twice as smart forever

- That’s their problem

- Energy consumption and economic viability will also limit GPTs’ success

- Currently, VC funding covers costs

- Investors will eventually expect returns

- OpenAI’s current pricing and free offerings won’t last forever [3]

- When costs rise, excitement and expectations for GPTs will decrease

AGI

- OpenAI does not have a master plan

- It’s just a rapidly growing startup riding a wave of success from an experiment

- Initially, they aimed to build AGI

- This goal remains distant (see Limits)

- Instead, they are currently riding the ChatGPT hype wave

- They must maintain this momentum to avoid failure

- They do this by:

- Indirectly fearmongering about AGI

- Obfuscating product workings to make them seem magical

- Trying to monopolize popular AI terms to direct hype and energy towards themselves [4]

- Generating revenue through investments and building successful products atop ChatGPT

- Furthermore, the pressure created by the ChatGPT hype compressed their (public) timeline for achieving AGI

- This is evident in Sam Altman’s interviews, pre- and post-ChatGPT

- Pre-ChatGPT, interviews were informative and targeted at experts, with AGI being a distant goal

- Post-ChatGPT, interviews feel like pop-science

- The focus is on grand visions of an AGI-driven world

- These ‘interviews’ are disguised advertisements

- Targeting well-educated audiences

- Assuming AGI (created by OpenAI) as an inevitable future

- Portraying OpenAI as an open research lab on the brink of achieving AGI, inviting public participation through prototype testing

- In reality, they are a for-profit company focused on product creation and revenue

- They rely on the research lab image to function

- Therefore, concerns about AGI or ethics are often just marketing

- It’s similar to how Nike sells sportswear, making consumers feel like high-performance athletes through advertisements

Competition

- OpenAI dislikes competition

- As noted in ChatGPT, they aim to create a monopoly

- They want ‘OpenAI’ to be synonymous with ‘AI’

- They hinder smaller competitors by calling for AI regulations [5]

- To make regulators move faster, they pull out their good old ‘AGI is coming’ trump card

- These regulations would hurt OpenAI but devastate smaller competitors

Drama

- OpenAI’s transformation has generated much noise

- This drama feels like a reality show for Zoomers

- It attracts media attention and stirs up discussion and emotions

- This isn’t necessarily bad for OpenAI, as they benefit from the attention (and attention is all you need after all ;) )

- Important information remains inside the company through lifetime NDAs [6]

Links

Other

- OpenAI researchers warned board of AI breakthrough ahead of CEO ouster | Hacker News (ycombinator.com)

- After OpenAI’s blowup, it seems pretty clear that ‘AI safety’ isn’t a real thing | Hacker News (ycombinator.com)

- OpenAI’s Long-Term AI Risk Team Has Disbanded | WIRED

Footnotes

- [1] OpenAI Cofounder Surprised by ChatGPT’s Massive Success (businessinsider.com)

- [3] OpenAI is too cheap to beat | Hacker News (ycombinator.com)

- [4] OpenAI – Application for US trademark “GPT” has failed | Hacker News (ycombinator.com)

- [5] OpenAI Lobbied the E.U. To Water Down AI Regulation | Hacker News (ycombinator.com)

- [6] OpenAI departures: Why can’t former employees talk? | Hacker News (ycombinator.com)